Setting up a local Apache Kafka instance for testing

I recently started digging into Apache Kafka for a project I'm working on at work. The more I looked into it, the more I realized I needed a local Kafka cluster to play around with. This article walks you through that process and will hopefully save you some time 😄.

If you don’t know what Kafka is, here’s a great video from Confluent.

If you're interested, you can also check out my blog post on Kafka's main concepts here:

💡 You can follow along by cloning the repo I have created over here.

Installing the binaries

There's a quickstart guide in the official docs. To be honest, I didn't want to install anything locally, since I wanted to keep the Kafka instance fairly separate from my dev environment, so I went with the Docker approach described below.

The Docker way

This is by far the easiest approach I have found, and it saves you a ton of time. Once you have cloned the repo, just spin it up:

    docker-compose up -d

The `-d` flag runs the containers in the background. If you want to know what's going on here, let's take a look at the docker-compose.yaml file.

    ---
    version: '3'
    services:
      zookeeper:
        image: confluentinc/cp-zookeeper:7.0.1
        container_name: zookeeper
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000

      broker:
        image: confluentinc/cp-kafka:7.0.1
        container_name: broker
        ports:
          # To learn about configuring Kafka for access across networks see
          # https://www.confluent.io/blog/kafka-client-cannot-connect-to-broker-on-aws-on-docker-etc/
          - "9092:9092"
        depends_on:
          - zookeeper
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: 'zookeeper:2181'
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092,PLAINTEXT_INTERNAL://broker:29092
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1

- We spin up a Zookeeper instance on port 2181, reachable only from within the Docker network (i.e. not accessible from the host).
- Next, we spin up our broker, the Kafka instance itself, which depends on Zookeeper. It is exposed on port 9092 and is accessible from the host.

Everything else is left at its defaults. If you want to learn what each environment variable does, head over here. You can also add more brokers (Kafka instances) to the same docker-compose file if you want.
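The compose file actually advertises two listeners: `PLAINTEXT://localhost:9092` for clients on your host, and `PLAINTEXT_INTERNAL://broker:29092` for other containers on the same Docker network. Here's a minimal sketch of reaching the broker from a throwaway container through the internal listener. It assumes Compose's default network naming (`<project>_default`, where the project name defaults to the directory holding the compose file); `kafka-local_default` and the function name are hypothetical, so check your actual network with `docker network ls`.

```bash
#!/usr/bin/env bash
# Sketch: list topics from a throwaway container attached to the Compose
# network, connecting through the internal listener (broker:29092).
# ASSUMPTION: "kafka-local_default" is a hypothetical network name; Compose
# names it "<project>_default", so check yours with `docker network ls`.
list_topics_internal() {
  local network="${1:-kafka-local_default}"
  docker run --rm --network "$network" confluentinc/cp-kafka:7.0.1 \
    kafka-topics --bootstrap-server broker:29092 --list
}
```

For example, `list_topics_internal kafka-local_default`. From your host you would keep using `localhost:9092`, since the name `broker` only resolves inside the Docker network, while inside another container `localhost:9092` would point at that container itself.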

This approach is quite beneficial if you want to stand up an instance for testing on the go or even in a CI environment.
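In CI in particular, `docker-compose up -d` returns as soon as the containers have started, not when the broker is ready to accept requests (`depends_on` only controls start order). A minimal sketch of a readiness check polls the broker with `kafka-topics --list` until it answers, then creates the test topic explicitly rather than relying on the broker auto-creating it on first use. The function names, retry count and delay are my own hypothetical choices, not part of the repo.

```bash
#!/usr/bin/env bash
# Sketch of a CI bootstrap helper. Function names, retry count and delay are
# hypothetical choices, not part of the repo.

# Poll the broker until it answers a metadata request, or give up.
# Usage: wait_for_kafka [max_attempts] [delay_seconds]
wait_for_kafka() {
  local max_attempts="${1:-30}" delay="${2:-2}" attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # `kafka-topics --list` only succeeds once the broker serves requests.
    # While the broker is still starting, one attempt may block until the
    # admin client's own timeout expires.
    if docker exec broker kafka-topics --bootstrap-server localhost:9092 --list >/dev/null 2>&1; then
      echo "Kafka is ready after ${attempt} attempt(s)"
      return 0
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  echo "Kafka was not ready after ${max_attempts} attempts" >&2
  return 1
}

# Create a topic explicitly; --if-not-exists makes reruns harmless.
# Replication factor 1 because the compose file runs a single broker.
create_topic() {
  local topic="${1:?topic name required}"
  docker exec broker kafka-topics --bootstrap-server localhost:9092 \
    --create --if-not-exists --topic "$topic" \
    --partitions 1 --replication-factor 1
}
```

A CI job could then run `docker-compose up -d` followed by `wait_for_kafka && create_topic example-topic` before the tests start.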

Interacting with the cluster

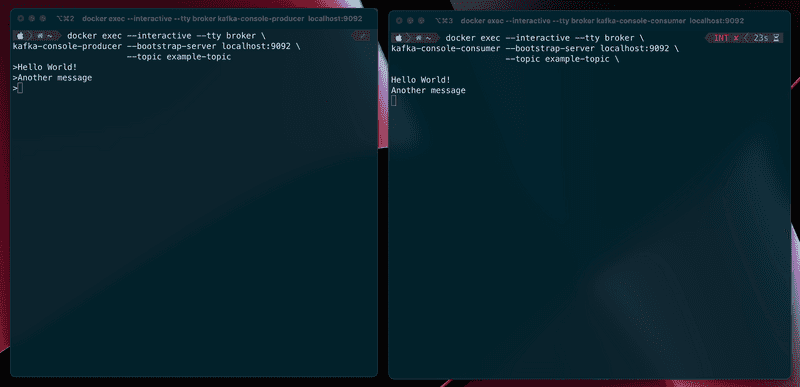

Before we publish anything, let’s spin up a consumer so that we know our setup is working. To do that, run the following command from the CLI.

    docker exec --interactive --tty broker \
      kafka-console-consumer --bootstrap-server broker:9092 \
                             --topic example-topic \
                             --from-beginning

Now let's create some messages and see them in action! In a second terminal, run:

    docker exec --interactive --tty broker \
      kafka-console-producer --bootstrap-server localhost:9092 \
                             --topic example-topic

Type a message, press Enter, and it should show up in the consumer's terminal. This is what it looks like in the terminal, with the producer on the left and the consumer on the right.
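The console producer also reads from standard input, so you can send messages without typing them, which is handy in scripts. Here's a minimal sketch; note `--interactive` without `--tty`, because the input comes from a pipe rather than a terminal. The second function sends keyed messages using the console producer's `parse.key` and `key.separator` properties. The function names and the `:` separator are my own hypothetical choices.

```bash
#!/usr/bin/env bash
# Sketch: send each line of stdin as a separate message to a topic.
# Function names are hypothetical helpers, not part of the repo.
produce_lines() {
  local topic="${1:-example-topic}"
  # --interactive keeps stdin open; no --tty because stdin is a pipe.
  docker exec --interactive broker \
    kafka-console-producer --bootstrap-server localhost:9092 --topic "$topic"
}

# Send "key:value" lines as keyed messages; the text before the first ':'
# becomes the record key.
produce_keyed_lines() {
  local topic="${1:-example-topic}"
  docker exec --interactive broker \
    kafka-console-producer --bootstrap-server localhost:9092 --topic "$topic" \
      --property parse.key=true --property key.separator=:
}
```

For example, `printf 'hello\nworld\n' | produce_lines example-topic` or `printf 'user1:signed up\n' | produce_keyed_lines example-topic`. The consumer above only prints values; adding `--property print.key=true` to it shows the keys as well.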

A GUI perhaps?

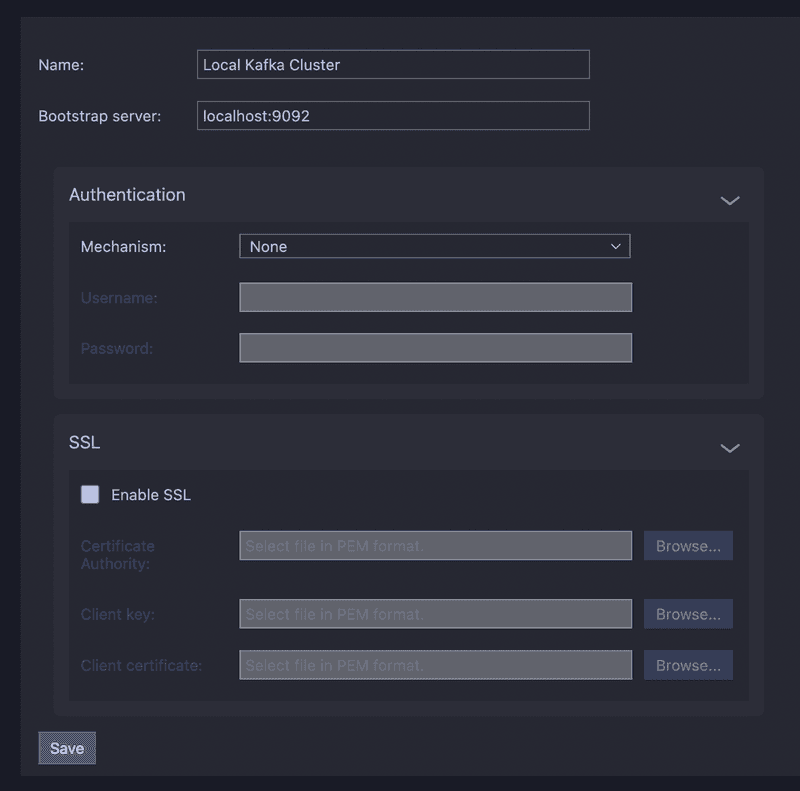

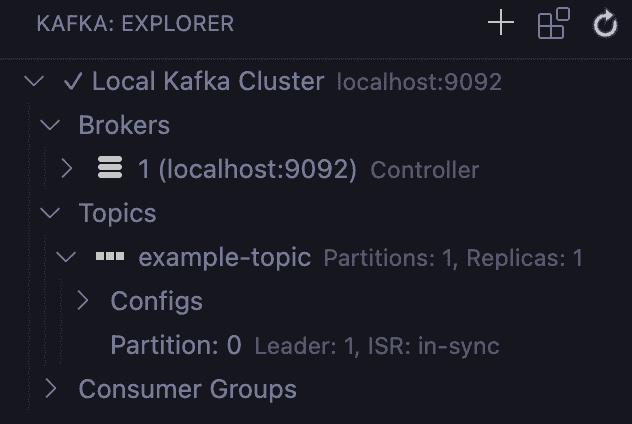

Not a fan of the CLI? There are many tools out there. I wanted a nice, lightweight, open-source option, and went with the Tools for Apache Kafka extension for VS Code.

Once it's installed, you can interact with your cluster from the sidebar, where a new icon with Kafka's logo will appear!

Here’s what it looks like when it finds your instance.

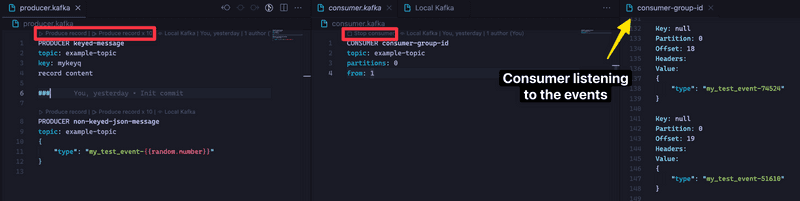

The extension also lets you define producers in a `.kafka` file, for example:

    PRODUCER keyed-message
    topic: example-topic
    key: mykeyq
    record content

    ###

    PRODUCER non-keyed-json-message
    topic: example-topic
    {
        "type": "my_test_event-{{random.number}}"
    }

and consumers as well:

    CONSUMER consumer-group-id
    topic: example-topic
    partitions: 0
    from: 1

You can then invoke these producers and consumers right within VS Code, which is pretty easy and cool!
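If you'd like to reproduce the `CONSUMER` block above from the terminal (partition 0 only, starting at offset 1), the console consumer has matching `--partition` and `--offset` options. This is a minimal sketch assuming `from: 1` in the extension means "start at offset 1"; it also prints each record's key and stops after a fixed number of messages. Consumer-group handling is left out, and the function name and defaults are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read one partition from a given offset, printing keys as well.
# ASSUMPTION: mirrors `partitions: 0` / `from: 1` from the VS Code CONSUMER block.
# Usage: consume_from_offset [topic] [partition] [offset] [max_messages]
consume_from_offset() {
  local topic="${1:-example-topic}" partition="${2:-0}" offset="${3:-1}" max="${4:-10}"
  docker exec --interactive --tty broker \
    kafka-console-consumer --bootstrap-server localhost:9092 \
      --topic "$topic" --partition "$partition" --offset "$offset" \
      --max-messages "$max" \
      --property print.key=true
}
```

Running `consume_from_offset example-topic 0 1 5` would print up to five records from partition 0, starting at offset 1.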

Conclusion

Well, that's it! Standing up Kafka in Docker certainly saved me some time for testing purposes. I hope you enjoyed this post, and see you next time 👋