Simple In-Memory Caching in .NET Core with IMemoryCache

Caching is the process of storing frequently used data so that future requests for it can be served faster.

💡 UPDATE on March 2023: I have recently migrated this project to .NET 7 and refactored the code to make it more readable. You can find the previous updates at the bottom of this post.

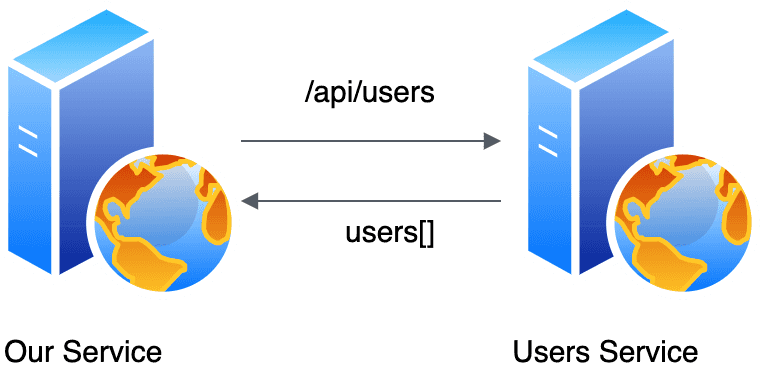

Suppose we have a very lightweight service (“Our service”) that talks to another server (“Users Service”), which returns an array of users and whose data does not change frequently.

Without any caching in place, every incoming request triggers another call to the Users Service, which can lead to timeouts or keep the remote server unnecessarily busy.

Walkthrough video

If you would rather watch a video walkthrough with a thorough explanation instead of reading this article, you can follow along on my YouTube channel too 😊

Introduction to IMemoryCache

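Let’s have a look at how we can improve the performance of these requests by using a simple caching implementation. .NET Core provides two cache abstractions under the Microsoft.Extensions.Caching namespaces (IMemoryCache lives in Microsoft.Extensions.Caching.Memory and IDistributedCache in Microsoft.Extensions.Caching.Distributed):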

- IMemoryCache - Simplest form of cache which utilises the memory of the web server.

- IDistributedCache - Usually used if you have a server farm with multiple app servers.

In this example we will be using IMemoryCache along with the latest version of .NET at the time of writing, which is version 7.
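Before wiring anything into a web app, it can help to see the cache on its own. The following is a minimal sketch, assuming a console project that references the Microsoft.Extensions.Caching.Memory package; the cache key and the user names are made up for illustration.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Create a standalone cache. In an ASP.NET Core app you would normally
// receive IMemoryCache through dependency injection instead.
using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

const string cacheKey = "users"; // hypothetical key name

// Nothing has been stored yet, so the first lookup is a cache miss.
bool foundBefore = cache.TryGetValue<string[]>(cacheKey, out _);

// Store a value, then read it back from memory.
cache.Set(cacheKey, new[] { "George", "Janet" });
bool foundAfter = cache.TryGetValue<string[]>(cacheKey, out var cachedUsers);

Console.WriteLine($"Before Set: found = {foundBefore}");
Console.WriteLine($"After Set: found = {foundAfter}, count = {cachedUsers?.Length}");
```

The values live in the memory of the process, so they disappear when the app restarts and are not shared between servers.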

These are the steps we are going to follow:

- Create/Clone a sample .NET Core app

- Naive implementation

- Refactoring our code to use locking

1. Create/Clone a sample .NET Core app

You can simply clone the In-memory cache sample code repo I have made for this post. If not, make sure that you scaffold a new ASP.NET Core MVC app to follow along.

First, we need to inject the in-memory caching service into the constructor of the HomeController:

using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Logging;

public class HomeController : Controller
{
    private readonly IMemoryCache _cache;

    public HomeController(ILogger<HomeController> logger, IMemoryCache memoryCache)
    {
        // The IMemoryCache instance is supplied by dependency injection.
        _cache = memoryCache;
    }
}

Note: With .NET Core 3.1 you don’t need to specifically register the memory caching service. However, if you are using a prior version such as 2.1, then you will need to add the following line in Startup.cs:

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMemoryCache(); // Add this line for .NET Core 2.1
        services.AddMvc().SetCompatibilityVersion(CompatibilityVersion.Version_2_1);
    }
}

2. Naive implementation

For the sake of this tutorial, we’ll use a free external API such as reqres.in/api/users. Let’s imagine we want to cache the response of the API. For simplicity, I have used the example code provided by Microsoft Docs.

// Code removed for brevity
...
// Look for cache key.
if (!_cache.TryGetValue(CacheKeys.Entry, out cacheEntry))
{
    // Key not in cache, so get data.
    cacheEntry = DateTime.Now;

    // Set cache options.
    var cacheEntryOptions = new MemoryCacheEntryOptions()
        // Keep in cache for this time, reset time if accessed.
        .SetSlidingExpiration(TimeSpan.FromSeconds(3));

    // Save data in cache.
    _cache.Set(CacheKeys.Entry, cacheEntry, cacheEntryOptions);
}

Explanation

The code is pretty straightforward. We first check whether the value for the given key is present in our in-memory cache store. If not, we fetch the data and store it in our cache. SetSlidingExpiration controls how long an entry may sit unused: if no one accesses the cached value for the configured period (3 seconds in the snippet above), it expires and gets removed. Each access within that period renews the expiration.
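To see the sliding behaviour in action, here is a small sketch that you can run as a console app. It assumes the Microsoft.Extensions.Caching.Memory package is referenced, and it uses a 1 second window (rather than the 3 seconds above) purely so the demo finishes quickly. The key name is made up.

```csharp
using System;
using System.Threading;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// Assumption for the demo: a 1 second sliding window.
var options = new MemoryCacheEntryOptions()
    .SetSlidingExpiration(TimeSpan.FromSeconds(1));

cache.Set("timestamp", DateTime.Now, options);

// Access the entry before the window elapses: it is found and the window restarts.
Thread.Sleep(600);
bool hitAfterFirstWait = cache.TryGetValue<DateTime>("timestamp", out _);

// More than 1 second has now passed since the entry was created, but only
// about 600 ms since the last access, so the entry is still alive.
Thread.Sleep(600);
bool hitAfterSecondWait = cache.TryGetValue<DateTime>("timestamp", out _);

// Leave the entry untouched for longer than the window: it expires.
Thread.Sleep(1500);
bool hitAfterIdle = cache.TryGetValue<DateTime>("timestamp", out _);

Console.WriteLine($"After first wait: {hitAfterFirstWait}");
Console.WriteLine($"After second wait: {hitAfterSecondWait}");
Console.WriteLine($"After idling: {hitAfterIdle}");
```

One thing to keep in mind is that an entry with only a sliding expiration can stay in the cache indefinitely if it keeps getting accessed. If that is a concern, MemoryCacheEntryOptions also has SetAbsoluteExpiration, which can be combined with the sliding window to put an upper limit on the entry's lifetime.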

Suppose we want to get a list of users as per our use case. Here’s my implementation with a bit of code re-structuring:

var users = _cacheProvider.GetFromCache<IEnumerable<User>>(cacheKey);
if (users != null) return users;

// Key not in cache, so get data.
users = await func();

var cacheEntryOptions = new MemoryCacheEntryOptions()
    .SetSlidingExpiration(TimeSpan.FromSeconds(10));
_cacheProvider.SetCache(cacheKey, users, cacheEntryOptions);

return users;

await func() waits for and returns the response from our external API endpoint (func is passed into our method as a parameter) so that we can store that value in our cache.

Have a look at the full implementation of my GetCachedResponse() in CachedUserService.cs for a more generic solution to handle any type of data.
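If you don't have the repo at hand, the idea of a generic "get from cache or fetch" helper can be sketched roughly as follows. This is not the code from CachedUserService.cs; the helper name GetCachedResponseAsync, the FetchUsersAsync stand-in and the sample data are all assumptions made for illustration, and the sketch talks to IMemoryCache directly instead of going through a cache provider.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
int fetchCount = 0;

// Hypothetical stand-in for the call to the external Users Service.
async Task<string[]> FetchUsersAsync()
{
    fetchCount++;
    await Task.Delay(50); // simulate network latency
    return new[] { "George", "Janet" };
}

// Hypothetical generic helper: return the cached value for the key if there is one,
// otherwise run the supplied delegate, cache its result and return it.
async Task<T> GetCachedResponseAsync<T>(string cacheKey, Func<Task<T>> func) where T : class
{
    if (cache.TryGetValue<T>(cacheKey, out var cached) && cached is not null)
        return cached;

    // Key not in cache, so get data.
    T fresh = await func();

    var cacheEntryOptions = new MemoryCacheEntryOptions()
        .SetSlidingExpiration(TimeSpan.FromSeconds(10));
    cache.Set(cacheKey, fresh, cacheEntryOptions);

    return fresh;
}

// Two sequential calls: the second one should be served from the cache.
string[] first = await GetCachedResponseAsync<string[]>("users", FetchUsersAsync);
string[] second = await GetCachedResponseAsync<string[]>("users", FetchUsersAsync);

Console.WriteLine($"Fetched {fetchCount} time(s); same instance: {ReferenceEquals(first, second)}");
```

The library also ships a GetOrCreateAsync extension method on IMemoryCache that covers a similar "get or populate" flow, so depending on your needs you may not have to write a helper like this yourself.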

This gets the job done for a very simple workload. But how can we make this more reliable if there are multiple threads accessing our cache store? Let’s have a look in our next step.

3. Refactoring our code to use locking

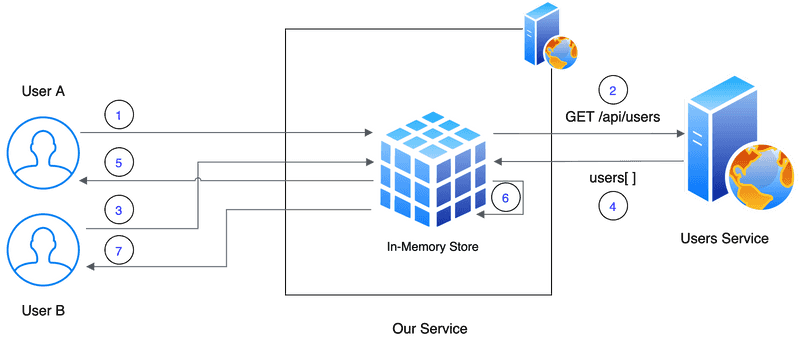

Now, let’s assume that we have several users accessing our service, which means there could be multiple requests accessing our in-memory cache at the same time. One way to make sure that no two users get different results is by utilising .NET locks. Consider the following scenario:

💡 Note: I need to mention that IMemoryCache is thread-safe by design. However, if you are doing a computationally heavy task when creating the initial cache entry, it’s advisable to use a Semaphore to synchronize the threads accessing the same logic, as shown in my examples.

Let’s break down the sequence of requests and responses:

- User A makes a request to our web service

- The in-memory cache doesn’t have a value in place, so the request enters the lock and calls the Users Service

- User B makes a request to our web service and waits until the lock is released

- The Users Service returns the response to our web service and the value is cached

- The lock is released and User A gets the response

- User B enters the lock and the cache provides the value (as long as it hasn’t expired)

- User B gets the response

This way, we can reduce the number of calls being made to the external web service.

The above depiction is a very high-level abstraction over all the awesome stuff that happens under the covers. Please use this as a guide only. Let’s implement this!

...
// Shared by all requests: declare this as a static field of the service class.
// A semaphore created inside the method would be new on every call and block no one.
private static readonly SemaphoreSlim semaphore = new SemaphoreSlim(1, 1);
...
var users = _cacheProvider.GetFromCache<IEnumerable<User>>(cacheKey);
if (users != null) return users;

// Wait outside the try block so that Release() only runs once the semaphore is held
await semaphore.WaitAsync();
try
{
    // Recheck to make sure it didn't populate before entering semaphore
    users = _cacheProvider.GetFromCache<IEnumerable<User>>(cacheKey);
    if (users != null) return users;

    users = await func();

    var cacheEntryOptions = new MemoryCacheEntryOptions()
        .SetSlidingExpiration(TimeSpan.FromSeconds(10));
    _cacheProvider.SetCache(cacheKey, users, cacheEntryOptions);
}
finally
{
    // It's important to do this, otherwise we'll be locked forever
    semaphore.Release();
}

return users;

Explanation

Same as in our previous example, we first check our cache for a value for the provided key. If there isn’t one, we asynchronously wait to enter the Semaphore. Once our thread has been granted access to the Semaphore, we recheck whether the value has been populated in the meantime. If we still don’t have a value, we call our external service and store the value in the cache.
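If you want to convince yourself that this check, wait, recheck flow really limits the calls to the external service, the following self-contained sketch simulates ten simultaneous requests. It assumes a console project referencing Microsoft.Extensions.Caching.Memory; FetchUsersAsync is a made-up stand-in for the Users Service and the sketch uses IMemoryCache directly rather than the cache provider from the repo.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// One semaphore shared by every caller; a semaphore per call would not block anyone.
var semaphore = new SemaphoreSlim(1, 1);
int fetchCount = 0;

// Hypothetical slow call standing in for the Users Service.
async Task<string[]> FetchUsersAsync()
{
    Interlocked.Increment(ref fetchCount);
    await Task.Delay(200); // simulate a slow remote call
    return new[] { "George", "Janet" };
}

async Task<string[]> GetUsersAsync()
{
    // Fast path: no locking needed when the value is already cached.
    if (cache.TryGetValue<string[]>("users", out var users) && users is not null)
        return users;

    await semaphore.WaitAsync();
    try
    {
        // Recheck: another caller may have populated the cache while we were waiting.
        if (cache.TryGetValue<string[]>("users", out users) && users is not null)
            return users;

        users = await FetchUsersAsync();
        cache.Set("users", users, new MemoryCacheEntryOptions()
            .SetSlidingExpiration(TimeSpan.FromSeconds(10)));
        return users;
    }
    finally
    {
        // Always release, even if the fetch throws.
        semaphore.Release();
    }
}

// Simulate ten users hitting the service at the same time.
string[][] results = await Task.WhenAll(
    Enumerable.Range(0, 10).Select(_ => Task.Run(() => GetUsersAsync())));

Console.WriteLine($"Requests: {results.Length}, calls to the Users Service: {fetchCount}");
```

Only the first caller to enter the semaphore makes the slow call; everyone else either finds the value on the fast path or picks it up on the recheck after waiting.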

Have a look at the CachedUserService.cs for the full implementation.


Hope you enjoyed this tutorial. Happy to know your thoughts! 🙂

Updates

💡 UPDATE on Aug 2022: I have recently migrated this project to .NET 6, along with its minimal hosting model 🎉. You can still access the .NET Core 3.1 version from this branch.

💡 UPDATE on Feb 2021: Head over to my blog post on IDistributedCache if you are interested to see how to integrate with Redis as a distributed caching solution.